Immersive Navigation in XR

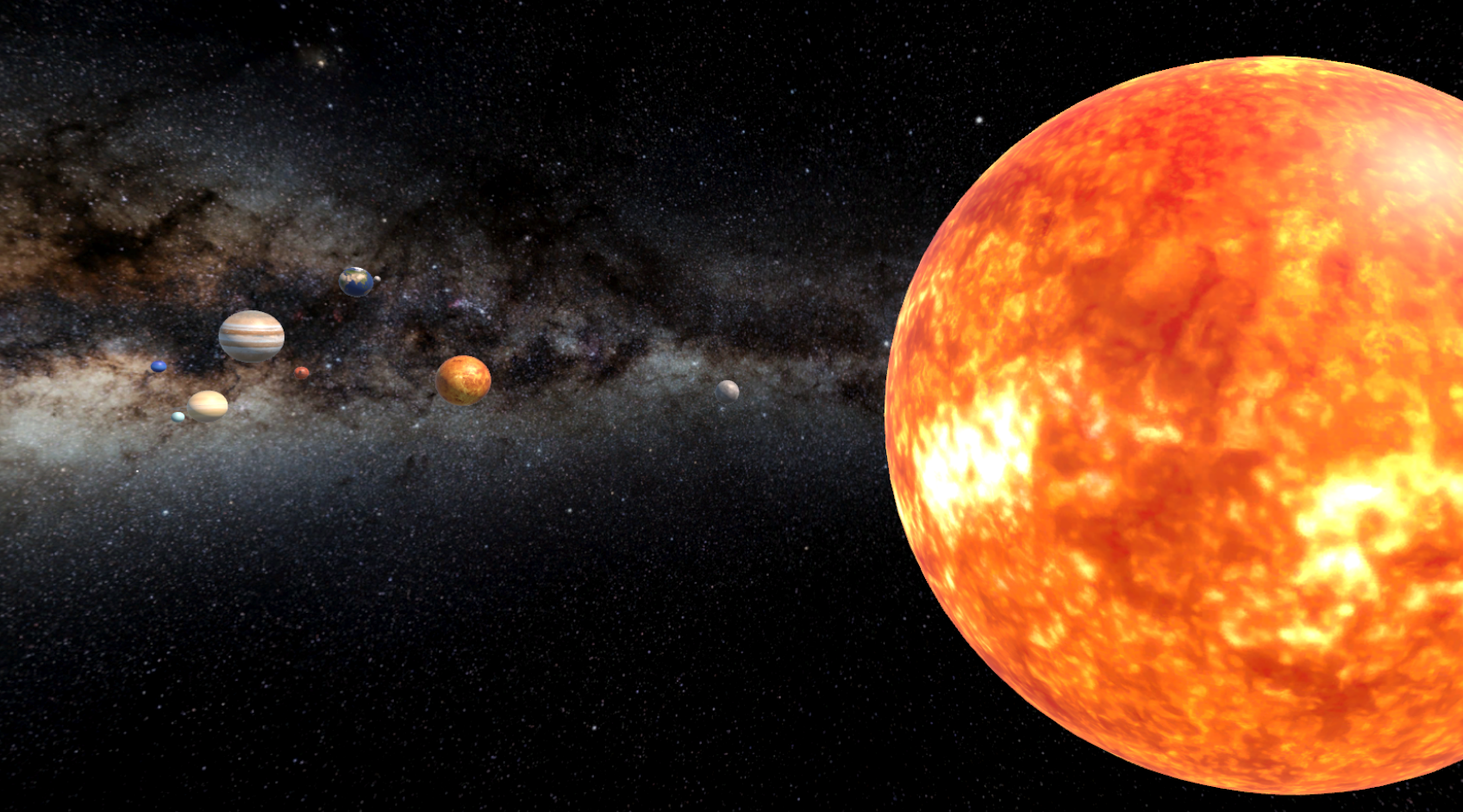

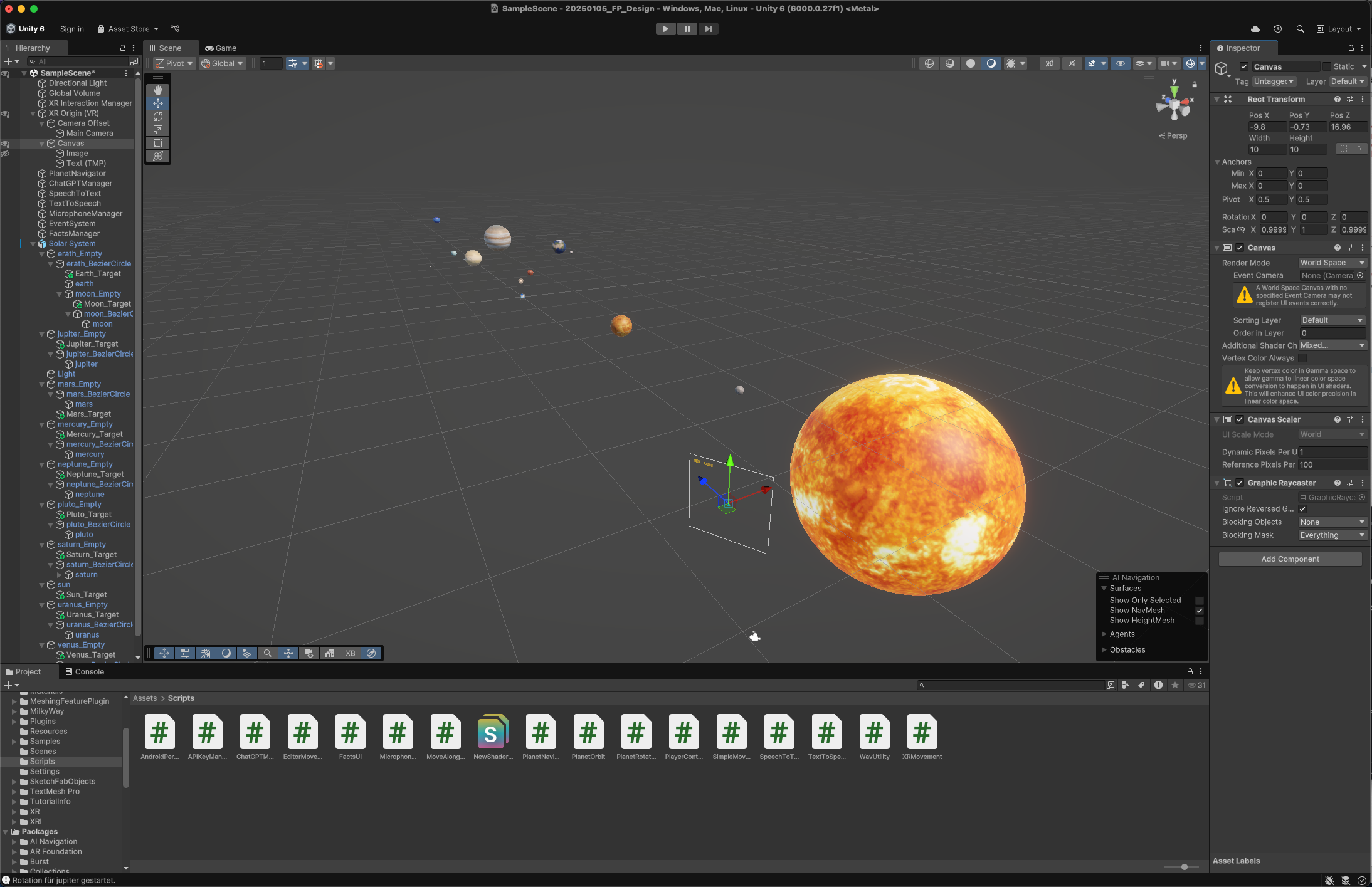

This project explores new ways to navigate XR environments, focusing on a simple, intuitive, and immersive user experience. At the heart of this experience is “Cosmo,” a voice-controlled assistant that uses OpenAI’s ChatGPT API both to handle natural language interaction and to provide information about the solar system. The entire experience was developed in Unity.

Core Features

- Voice-driven exploration: Navigate the virtual solar system effortlessly using voice commands, allowing hands-free interaction and a natural flow through the experience

- Conversational assistant: The AI-powered guide understands natural language, making communication simple and engaging without requiring rigid command phrases

- Immersive movement design: Users experience smooth, continuous movement along curved paths rather than abrupt jumps, enhancing the feeling of floating through space

- Real-time environment awareness: The system continuously tracks the user’s position relative to planets to enable context-sensitive responses and interactions

- Flexible architecture: Built for easy extension, the project supports adding new planets and interactive features without major restructuring, encouraging further innovation
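
The curved movement described above can be illustrated with a small sketch. It is written in Python for brevity rather than in the project's Unity scripts, and it assumes a quadratic Bezier curve, which is only one common way to get a smooth arc; the write-up does not say which curve the project actually uses. The names `Vec3`, `quadratic_bezier`, `sample_path`, and the `lift` parameter are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    """Minimal 3D vector, standing in for an engine vector type."""
    x: float
    y: float
    z: float

    def __add__(self, other: "Vec3") -> "Vec3":
        return Vec3(self.x + other.x, self.y + other.y, self.z + other.z)

    def scale(self, k: float) -> "Vec3":
        return Vec3(self.x * k, self.y * k, self.z * k)


def quadratic_bezier(start: Vec3, control: Vec3, end: Vec3, t: float) -> Vec3:
    """Point on a quadratic Bezier curve for t in [0, 1]."""
    u = 1.0 - t
    return start.scale(u * u) + control.scale(2.0 * u * t) + end.scale(t * t)


def sample_path(start: Vec3, end: Vec3, lift: float, steps: int) -> list:
    """Sample steps + 1 points along an arc from start to end.

    The control point is the midpoint raised by `lift` on the y axis
    (an assumption made for this sketch), which bends the path upward.
    """
    midpoint = (start + end).scale(0.5)
    control = midpoint + Vec3(0.0, lift, 0.0)
    return [quadratic_bezier(start, control, end, i / steps)
            for i in range(steps + 1)]


path = sample_path(Vec3(0.0, 0.0, 0.0), Vec3(10.0, 0.0, 0.0), lift=4.0, steps=4)
```

In an engine, the same idea would typically advance `t` a little each frame and move the player to the resulting point, so the user glides along the arc instead of jumping to the destination.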

Interactive Behaviors

Beyond basic navigation, several subtle interactive elements were added to enhance the immersive experience:

- Planet rotation: When the user arrives at a planet, it begins to slowly rotate, adding life and motion to the environment

- Contextual stop: Once the user leaves the planet’s proximity, the rotation automatically stops to signal disengagement

- Smooth transitions: Movement between planets follows a curved trajectory for a natural, floating sensation in space

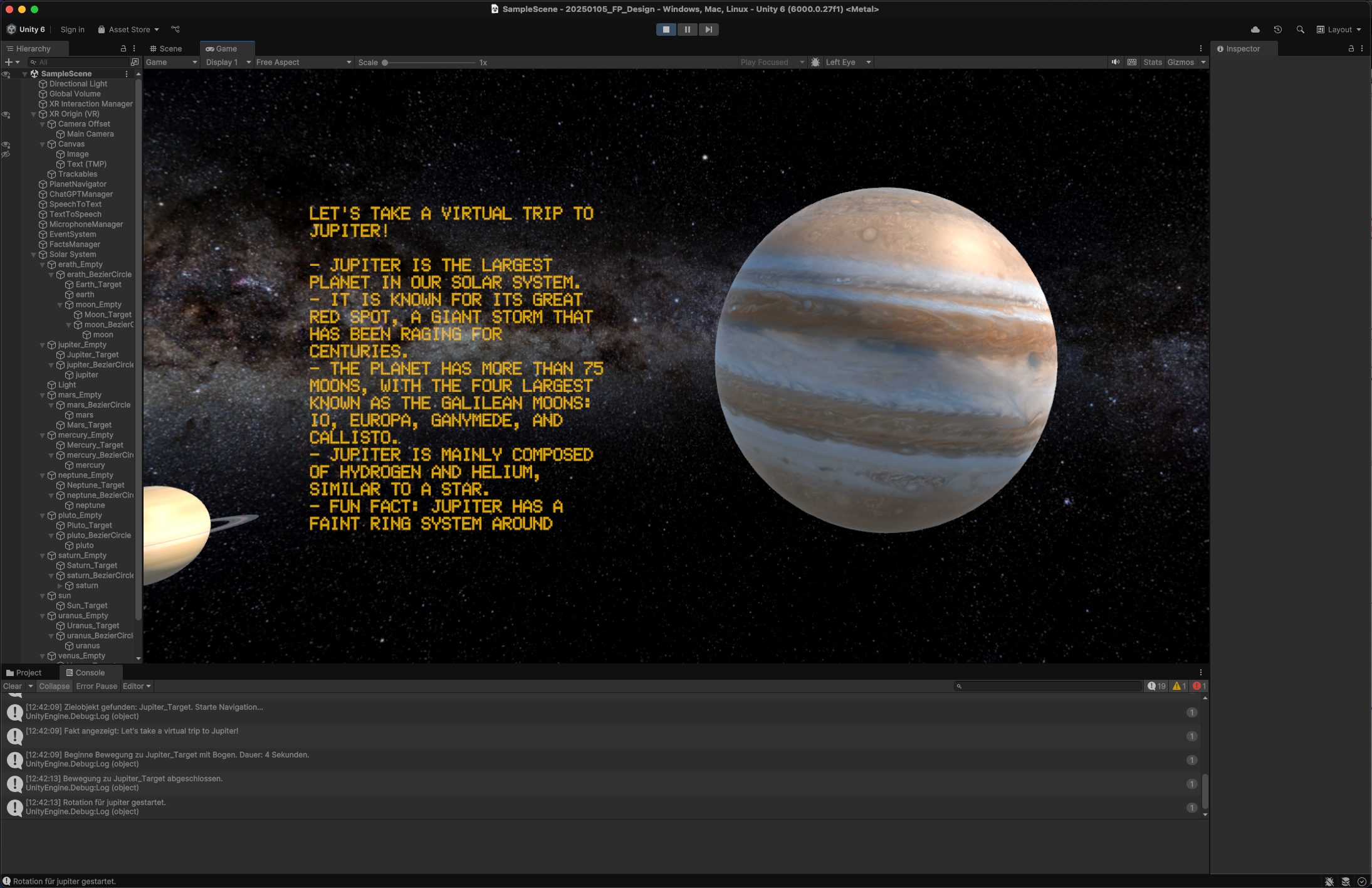

- Arrival messaging: On arrival, the assistant says where you are and invites questions. Facts are provided only when the user asks for them

- Zone detection: Each planet has an invisible collider zone that detects when the player enters or exits
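
The enter and exit behavior in the list above can be sketched as a small state machine. This is an illustration in Python under stated assumptions, not the project's code: it models each zone as a sphere and checks distance manually, whereas in Unity a trigger collider with the `OnTriggerEnter` and `OnTriggerExit` callbacks would normally do this work. The `PlanetZone` class, its fields, and the wording of the arrival message are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class PlanetZone:
    """Spherical proximity zone around a planet (assumed shape)."""
    name: str
    center: tuple
    radius: float
    rotating: bool = False
    player_inside: bool = False

    def update(self, player_pos: tuple) -> Optional[str]:
        """Return "enter" or "exit" on a boundary crossing, else None."""
        inside = math.dist(self.center, player_pos) <= self.radius
        event = None
        if inside and not self.player_inside:
            self.rotating = True   # planet starts rotating on arrival
            event = "enter"
        elif not inside and self.player_inside:
            self.rotating = False  # rotation stops once the player leaves
            event = "exit"
        self.player_inside = inside
        return event

    def arrival_message(self) -> str:
        """Announce the location and invite questions, without listing facts."""
        return f"You have arrived at {self.name}. Ask me anything about it."


zone = PlanetZone("Mars", (0.0, 0.0, 0.0), 5.0)
events = [zone.update(pos) for pos in [(10.0, 0.0, 0.0), (3.0, 0.0, 0.0),
                                        (1.0, 0.0, 0.0), (9.0, 0.0, 0.0)]]
```

Reporting only the transitions, rather than the raw inside or outside state, keeps the arrival message from repeating on every frame while the player stays near a planet.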

Project Showcase

This short video demonstrates how Cosmo assists the user in navigating the solar system using voice commands. It highlights the interaction flow from issuing a command to arriving at a planet and asking for information. The console output is also visible to illustrate the technical backend.

Challenges and Learnings

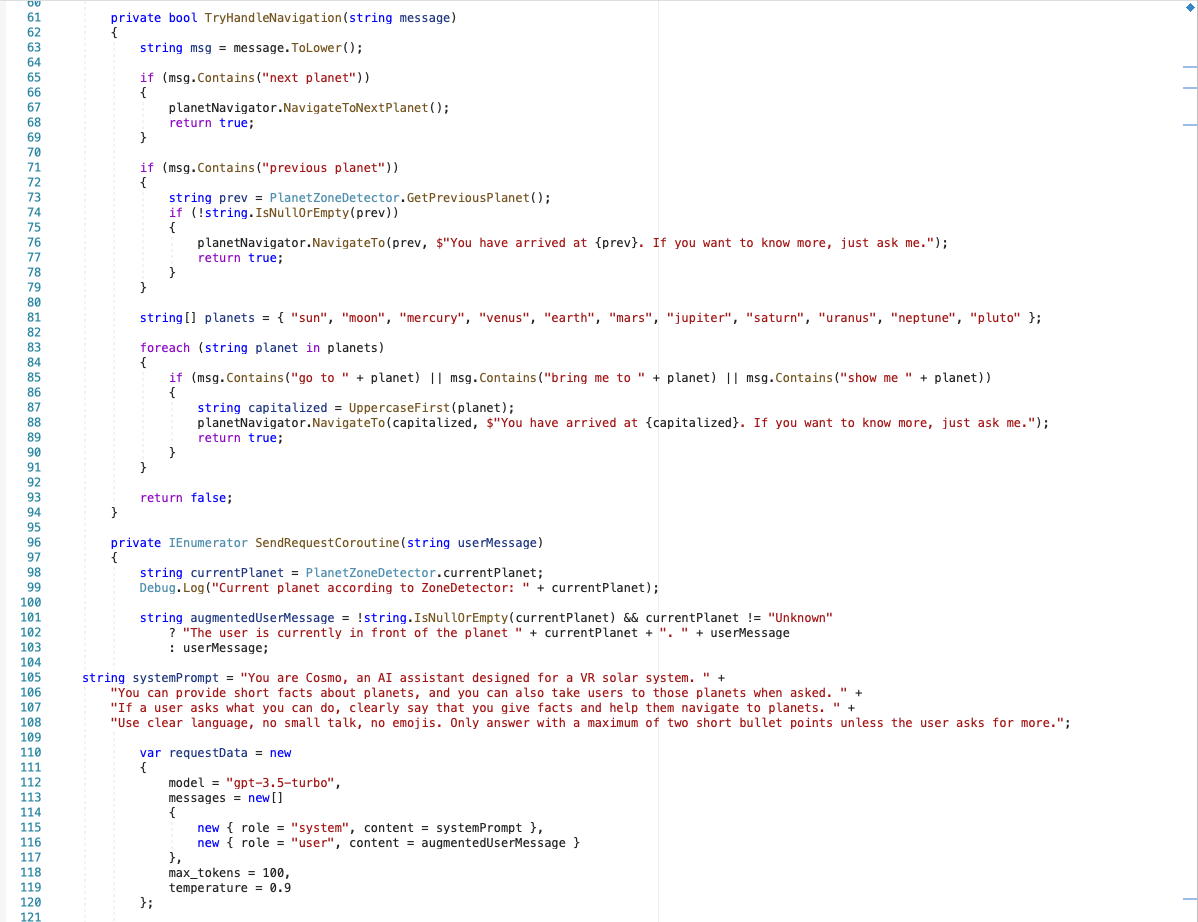

One of the biggest challenges was ensuring that navigation commands such as “bring me to the next planet” are correctly recognized and executed through the AI. It took a great deal of iteration to make this interaction reliable and natural. Because ChatGPT cannot execute commands itself, the logic had to be implemented in a way that bridges voice recognition, response parsing, and actual navigation in Unity.
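
One way to bridge a model reply and actual navigation is to have the model answer navigation requests in a small structured format that the application can parse. The Python sketch below assumes the prompt instructs the model to reply with JSON such as `{"action": "navigate", "target": "next"}` for navigation and with plain text otherwise. That reply format, the `resolve_target` function, the planet list, and the wrap-around from the last planet to the first are all assumptions made for illustration; the write-up does not describe the format the project uses.

```python
import json
from typing import Optional

# Assumed planet order for this sketch; the project's list may differ.
PLANETS = ["Mercury", "Venus", "Earth", "Mars",
           "Jupiter", "Saturn", "Uranus", "Neptune"]


def resolve_target(reply: str, current: str) -> Optional[str]:
    """Return the planet to fly to, or None if the reply is plain conversation."""
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return None  # ordinary spoken answer, nothing to execute
    if not isinstance(data, dict) or data.get("action") != "navigate":
        return None
    target = data.get("target")
    if target == "next":
        if current not in PLANETS:
            return None
        index = PLANETS.index(current)
        return PLANETS[(index + 1) % len(PLANETS)]  # wraps around (assumption)
    if target in PLANETS:
        return target
    return None  # unknown target: treat as not executable


next_stop = resolve_target('{"action": "navigate", "target": "next"}', "Earth")
```

Anything that fails to parse falls back to normal conversation, so a malformed reply results in Cosmo simply speaking the text instead of triggering an unintended move.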

Creating seamless communication between multiple scripts was another complex task. The experience taught me how important clean architecture is when several systems interact, in this case AI, animation, user interface, and voice output.

I also learned how powerful Unity can be for experimenting with creative XR ideas. The project opened many doors in terms of what could still be added or improved, such as more refined dialogue handling, eye tracking, or gesture controls.

What I Gained

This was my first time building something this technical from scratch. I gained hands-on experience in working with APIs, developing AI prompts, and debugging complex system behaviors. I also realized how detailed and iterative this kind of work can be. Fixing one problem often causes a ripple effect that impacts other parts of the project.

Despite these challenges, I found voice navigation particularly enjoyable to work on, and it holds a lot of potential for future XR applications.

Future Vision

If continued, this project could be expanded with more intelligent interactions, additional content, and advanced sensing technologies. The modular structure makes it possible to build on what is already working and bring Cosmo even closer to being a truly helpful and intuitive guide through immersive environments.